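Introduction

In the article below, I built a cluster on three Raspberry Pis, covering both the control plane and the workers.

I also summarized the HA (High Availability) configuration in the article below.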

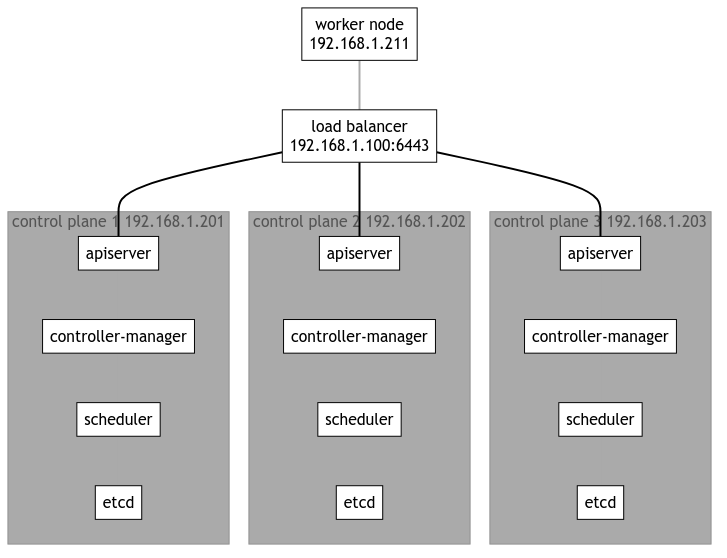

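As the next step, I am building an HA (High Availability) home Kubernetes cluster whose control plane runs on three Raspberry Pis.

For the worker, I use a single x86 (amd64) PC. Building on three Raspberry Pis was very effective for understanding how a Kubernetes cluster is put together. For actually deploying and trying out applications, though, I think an x86 machine makes a better worker, because more of the publicly available Docker images support that architecture and so more applications can be used. (Of course, if the goal is to run Kubernetes on Raspberry Pi/ARM, Raspberry Pis are fine.)

An HA configuration would normally call for at least three worker nodes, but this setup has only one, which makes it a "semi-HA" configuration. Even so, worker nodes can be added later without changing anything else, so the build procedure is the same as for a full HA configuration. I would like to add more worker nodes in the future if I can.

Note that an HA configuration needs a load balancer (LB) in front of the control plane, so that each request is routed to one of the available control-plane nodes.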

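Main references

I mainly followed the pages below.

Overall configuration

The configuration built here is shown below. (It is based on the diagram on the official page mentioned above.)

Users access the load balancer (192.168.1.100:6443) when they run kubectl.

The setup uses three Raspberry Pis, one PC, and one router with OpenWrt installed.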

- 192.168.1.100 OpenWrt (BUFFALO WZR-1750DHP)

- 192.168.1.201 k8s-ctrl1 (RaspberryPi 4B 8GB RAM)

- 192.168.1.202 k8s-ctrl2 (RaspberryPi 4B 8GB RAM)

- 192.168.1.203 k8s-ctrl3 (RaspberryPi 4B 8GB RAM)

- 192.168.1.211 k8s-worker1 (ASUS Chromebox3 i7-8550U 16GB RAM, 256GB NVMe SSD)

Software versions

- Ubuntu 22.04

- Kubernetes v1.29 (v1.29.4-2.1)

- Calico v3.27.0

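Load balancer

OpenWrt provides haproxy as a package, so I installed OpenWrt on a small Buffalo router and use it as the load balancer. The load balancer's IP address is 192.168.1.100. As described in the article below, I installed OpenWrt on the router, then installed and configured haproxy with opkg.

Some references combine haproxy with keepalived, but I did not go that route and chose the simpler haproxy-only configuration.

As I understand it, the purpose of keepalived is to make the load balancer itself redundant. As the official page also notes, how the load balancer is set up is not directly related to Kubernetes itself. My main goal is to catch up on and understand Kubernetes, so I judged the simple haproxy-only configuration to be sufficient. This does leave the load balancer as a single point of failure, but I expect a small router running OpenWrt to be reasonably stable.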
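For orientation, here is a minimal sketch of what an haproxy-only TCP front end for the three control-plane nodes could look like. It is not the configuration actually used on the router: the section names `k8s-api` and `k8s-ctrl` are hypothetical, and a real haproxy.cfg also needs `global`/`defaults` sections (timeouts and so on). The script only prints the fragment.

```shell
#!/usr/bin/env bash
# Sketch only: prints an haproxy fragment; this is an assumption, not the actual router config.

# Control-plane nodes from the node list, as "name:ip" pairs.
CTRL_NODES="k8s-ctrl1:192.168.1.201 k8s-ctrl2:192.168.1.202 k8s-ctrl3:192.168.1.203"
API_PORT=6443

render_haproxy_cfg() {
  # Frontend listens on the API port; backend forwards plain TCP (TLS is not terminated here).
  cat <<EOF
frontend k8s-api
    bind *:${API_PORT}
    mode tcp
    default_backend k8s-ctrl

backend k8s-ctrl
    mode tcp
    balance roundrobin
EOF
  local node
  for node in $CTRL_NODES; do
    # ${node%%:*} is the host name, ${node##*:} is the IP address.
    # "check" enables a basic TCP health check for each server.
    printf '    server %s %s:%s check\n' "${node%%:*}" "${node##*:}" "$API_PORT"
  done
}

render_haproxy_cfg
```

Forwarding in TCP mode keeps the TLS session end to end between kubectl and kube-apiserver, which is why the apiserver certificate generated later lists 192.168.1.100 among its IPs.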

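Control plane

- Hardware setup

- OS installation

- Basic OS configuration

- Installing containerd and Kubernetes

I set up the machines in the same way as in the article below. The host name is set on each machine with the matching command:

hostnamectl set-hostname k8s-ctrl1
hostnamectl set-hostname k8s-ctrl2
hostnamectl set-hostname k8s-ctrl3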

For /etc/hosts, I added the following entries.

cat << _EOF_ | sudo tee -a /etc/hosts
192.168.1.201 k8s-ctrl1
192.168.1.202 k8s-ctrl2
192.168.1.203 k8s-ctrl3
192.168.1.211 k8s-worker1
_EOF_
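Because `tee -a` appends unconditionally, running the command above twice leaves duplicate entries. The following is a minimal sketch of an idempotent variant; the function name `add_host_entry` and the `HOSTS_FILE` variable are hypothetical, and the demo writes to a temporary file rather than /etc/hosts.

```shell
#!/usr/bin/env bash
# Sketch (assumption, not the original procedure): add "ip name" to a hosts file
# only when the name is not already listed.
# HOSTS_FILE defaults to a temp file so this demo never touches /etc/hosts.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
  local ip="$1" name="$2"
  # awk exits 0 if any field after the address equals the name exactly.
  if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$HOSTS_FILE"; then
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
  fi
}

# Same four entries as above.
while read -r ip name; do
  add_host_entry "$ip" "$name"
done <<'EOF'
192.168.1.201 k8s-ctrl1
192.168.1.202 k8s-ctrl2
192.168.1.203 k8s-ctrl3
192.168.1.211 k8s-worker1
EOF

cat "$HOSTS_FILE"
```

To apply it for real, the script itself would have to run as root with `HOSTS_FILE=/etc/hosts`, since a shell function cannot be passed to sudo directly.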

Worker

Set up the worker machine as follows.

- Hardware setup

- OS installation

- Basic OS configuration

- Installing containerd and Kubernetes

The choice of machine and the detailed settings are described in the article below.

kubeadm init (for the first control-plane node)

Running kubeadm init

Run this on k8s-ctrl1. For --control-plane-endpoint, specify the IP address (and port) of the load balancer.

$ sudo kubeadm init --control-plane-endpoint "192.168.1.100:6443" --upload-certs

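Result of kubeadm init

The output of kubeadm init tells you what to do next, so be sure to keep a copy of it. It covers the following:

- Settings needed to start using the cluster

- Deploying a pod network (CNI)

- How to join the other control-plane nodes

- What to do when the uploaded certificates (upload-certs) have expired

- How to join worker nodes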

wurly@k8s-ctrl1:~$ sudo kubeadm init --control-plane-endpoint "192.168.1.100:6443" --upload-certs
I0506 22:26:35.713265 1438 version.go:256] remote version is much newer: v1.30.0; falling back to: stable-1.29
[init] Using Kubernetes version: v1.29.4
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
W0506 22:26:36.858967 1438 checks.go:835] detected that the sandbox image "registry.k8s.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-ctrl1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.201 192.168.1.100]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-ctrl1 localhost] and IPs [192.168.1.201 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-ctrl1 localhost] and IPs [192.168.1.201 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "super-admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 18.544814 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key: cf6b62a26809ce3e4126c782badb0853e02d97dab46f90d7e895dd96ac1b3a1d
[mark-control-plane] Marking the node k8s-ctrl1 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node k8s-ctrl1 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
[bootstrap-token] Using token: hdie35.u9airq6ychkt8amq
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
        --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a \
        --control-plane --certificate-key cf6b62a26809ce3e4126c782badb0853e02d97dab46f90d7e895dd96ac1b3a1d

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
        --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a
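Since the token, CA certificate hash, and certificate key are needed again for every join, one option is to save the output to a file and pull the values out with a small helper. The following is a minimal sketch and not part of the original procedure; the function name `join_param` is hypothetical, and the demo feeds it the join command printed above.

```shell
#!/usr/bin/env bash
# Sketch (assumption, not from the original steps): extract join parameters
# from saved kubeadm init output read on stdin.

# Print the first value that follows the given option, e.g. --token.
join_param() {
  local opt="$1"
  # -e lets the pattern start with "--"; [^ ]* takes everything up to the next space.
  grep -o -e "${opt} [^ ]*" | head -n 1 | cut -d ' ' -f 2
}

# Demo input: the control-plane join command from the output above.
sample='kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a \
    --control-plane --certificate-key cf6b62a26809ce3e4126c782badb0853e02d97dab46f90d7e895dd96ac1b3a1d'

printf '%s\n' "$sample" | join_param --token
printf '%s\n' "$sample" | join_param --discovery-token-ca-cert-hash
printf '%s\n' "$sample" | join_param --certificate-key
```

In practice the input would be a file captured with something like `sudo kubeadm init ... | tee kubeadm-init.log` (a hypothetical file name). That file contains the certificate key, so it should be treated as a secret.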

Settings needed to start using the cluster

First, as instructed, run the following (on k8s-ctrl1).

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Confirm that kubectl can be run. At this point the coredns pods are still Pending, because no pod network (CNI) has been deployed yet.

wurly@k8s-ctrl1:~$ kubectl get pod -n kube-system -w
NAME                                READY   STATUS    RESTARTS   AGE
coredns-76f75df574-mzng7            0/1     Pending   0          4m9s
coredns-76f75df574-t265x            0/1     Pending   0          4m9s
etcd-k8s-ctrl1                      1/1     Running   0          4m13s
kube-apiserver-k8s-ctrl1            1/1     Running   0          4m18s
kube-controller-manager-k8s-ctrl1   1/1     Running   0          4m13s
kube-proxy-d64kt                    1/1     Running   0          4m9s
kube-scheduler-k8s-ctrl1            1/1     Running   0          4m13s

Installing the CNI (Calico)

To match Kubernetes v1.29, I checked the statement below and chose Calico v3.27.

We test Calico v3.27 against the following Kubernetes versions. Other versions may work, but we are not actively testing them.

- v1.27

- v1.28

- v1.29
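The same check can be written down as a small helper, which is handy when the cluster is upgraded later. This is only a sketch: the function name `is_tested_with_calico` is hypothetical, and the version list is simply copied from the Calico statement above.

```shell
#!/usr/bin/env bash
# Sketch: check a Kubernetes version against the versions Calico v3.27 is tested with.
# The list is copied from the statement quoted above.
CALICO_TESTED="v1.27 v1.28 v1.29"

# Succeeds if the major.minor part of the given version (e.g. v1.29.4) is in the list.
is_tested_with_calico() {
  local minor
  minor="$(printf '%s' "$1" | cut -d . -f 1,2)"
  case " $CALICO_TESTED " in
    *" $minor "*) return 0 ;;
    *) return 1 ;;
  esac
}

if is_tested_with_calico v1.29.4; then
  echo "v1.29.4 is in the tested list for Calico v3.27"
fi
```

A version outside the list, such as v1.30.0, makes the function return non-zero; as the quote says, that means "not actively tested", not necessarily "broken".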

It can be deployed with the following command.

kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/calico.yaml

The result is shown below.

wurly@k8s-ctrl1:~$ kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.0/manifests/calico.yaml
poddisruptionbudget.policy/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
serviceaccount/calico-node created
serviceaccount/calico-cni-plugin created
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created
daemonset.apps/calico-node created
deployment.apps/calico-kube-controllers created

The calico and coredns containers start being created.

wurly@k8s-ctrl1:~$ kubectl get pod -n kube-system
NAME                                       READY   STATUS              RESTARTS   AGE
calico-kube-controllers-5fc7d6cf67-qn5xn   0/1     ContainerCreating   0          61s
calico-node-zz6c7                          0/1     Init:2/3            0          61s
coredns-76f75df574-mzng7                   0/1     ContainerCreating   0          10m
coredns-76f75df574-t265x                   0/1     ContainerCreating   0          10m
etcd-k8s-ctrl1                             1/1     Running             0          10m
kube-apiserver-k8s-ctrl1                   1/1     Running             0          10m
kube-controller-manager-k8s-ctrl1          1/1     Running             0          10m
kube-proxy-d64kt                           1/1     Running             0          10m
kube-scheduler-k8s-ctrl1                   1/1     Running             0          10m

calico-kube-controllers and coredns stuck in ContainerCreating

However, no matter how long I waited, calico-kube-controllers and coredns stayed in ContainerCreating.

wurly@k8s-ctrl1:~$ kubectl get pod -n kube-system
NAME                                       READY   STATUS              RESTARTS        AGE
calico-kube-controllers-5fc7d6cf67-qn5xn   0/1     ContainerCreating   0               6m26s
calico-node-zz6c7                          1/1     Running             0               6m26s
coredns-76f75df574-mzng7                   0/1     ContainerCreating   0               15m
coredns-76f75df574-t265x                   0/1     ContainerCreating   0               15m
etcd-k8s-ctrl1                             1/1     Running             0               15m
kube-apiserver-k8s-ctrl1                   1/1     Running             0               15m
kube-controller-manager-k8s-ctrl1          1/1     Running             1 (4m42s ago)   15m
kube-proxy-d64kt                           1/1     Running             0               15m
kube-scheduler-k8s-ctrl1                   1/1     Running             1 (4m40s ago)   15m
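When the list gets longer, it helps to filter it down to the pods that are not Running. The following is a minimal sketch that works on the plain-text table; the function name `not_running_pods` is hypothetical, and the demo input is an excerpt of the output above.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get pod` output on stdin and print pods whose STATUS is not Running.
not_running_pods() {
  # Skip the header row; column 1 is NAME and column 3 is STATUS.
  awk 'NR > 1 && $3 != "Running" { print $1 " " $3 }'
}

# Demo input: part of the table shown above.
not_running_pods <<'EOF'
NAME                                       READY   STATUS              RESTARTS        AGE
calico-kube-controllers-5fc7d6cf67-qn5xn   0/1     ContainerCreating   0               6m26s
calico-node-zz6c7                          1/1     Running             0               6m26s
coredns-76f75df574-mzng7                   0/1     ContainerCreating   0               15m
etcd-k8s-ctrl1                             1/1     Running             0               15m
EOF
```

On a live cluster the input would come from `kubectl get pod -n kube-system | not_running_pods`. Note that this simple filter also reports pods in states such as Completed.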

$ kubectl describe pod -n kube-system calico-kube-controllers-5fc7d6cf67-t24hh

(snip)
Events:
  Type     Reason                  Age                 From               Message
  ----     ------                  ----                ----               -------
  Normal   Scheduled               16m                 default-scheduler  Successfully assigned kube-system/cali
  Warning  FailedCreatePodSandBox  16m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  16m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  16m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  16m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  16m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  15m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  15m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  15m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  15m                 kubelet            Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported
  Warning  FailedCreatePodSandBox  98s (x58 over 14m)  kubelet            (combined from similar events): Failed8a69": plugin type="calico" failed (add): failed to create host netlink handle: protocol not supported

It appears the pod sandbox cannot be created for the following reason. (The messages are cut off in the middle, as captured.)

Failed to create pod sandbox: rpc erroed (add): failed to create host netlink handle: protocol not supported

Fixing calico not coming up (installing linux-modules-extra-raspi)

Searching for the message above turned up the following comment, which looked relevant.

I have been chasing down this issue on my 7 node stack. Not sure if you got the same problem but i never got any containers up. Found out that in Ubuntu 21.10 i had to install sudo apt install linux-modules-extra-raspi after stop and start it came up and working! 🙂

As a test, I installed linux-modules-extra-raspi, and...

wurly@k8s-ctrl1:~$ sudo apt install linux-modules-extra-raspi

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following additional packages will be installed:

linux-modules-extra-5.15.0-1053-raspi

The following NEW packages will be installed:

linux-modules-extra-5.15.0-1053-raspi linux-modules-extra-raspi

0 upgraded, 2 newly installed, 0 to remove and 0 not upgraded.

Need to get 19.7 MB of archives.

After this operation, 98.6 MB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 http://ports.ubuntu.com/ubuntu-ports jammy-updates/main arm64 linux-modules-extra-5.15.0-1053-raspi arm64 5.15.0-1053.56 [19.7 MB]

Get:2 http://ports.ubuntu.com/ubuntu-ports jammy-updates/main arm64 linux-modules-extra-raspi arm64 5.15.0.1053.50 [2390 B]

Fetched 19.7 MB in 9s (2091 kB/s)

Selecting previously unselected package linux-modules-extra-5.15.0-1053-raspi.

(Reading database ... 102389 files and directories currently installed.)

Preparing to unpack .../linux-modules-extra-5.15.0-1053-raspi_5.15.0-1053.56_arm64.deb ...

Unpacking linux-modules-extra-5.15.0-1053-raspi (5.15.0-1053.56) ...

Selecting previously unselected package linux-modules-extra-raspi.

Preparing to unpack .../linux-modules-extra-raspi_5.15.0.1053.50_arm64.deb ...

Unpacking linux-modules-extra-raspi (5.15.0.1053.50) ...

Setting up linux-modules-extra-5.15.0-1053-raspi (5.15.0-1053.56) ...

Setting up linux-modules-extra-raspi (5.15.0.1053.50) ...

Processing triggers for linux-image-5.15.0-1053-raspi (5.15.0-1053.56) ...

/etc/kernel/postinst.d/initramfs-tools:

update-initramfs: Generating /boot/initrd.img-5.15.0-1053-raspi

Using DTB: bcm2711-rpi-4-b.dtb

Installing /lib/firmware/5.15.0-1053-raspi/device-tree/broadcom/bcm2711-rpi-4-b.dtb into /boot/dtbs/5.15.0-1053-raspi/./bcm2711-rpi-4-b.dtb

Taking backup of bcm2711-rpi-4-b.dtb.

Installing new bcm2711-rpi-4-b.dtb.

flash-kernel: deferring update (trigger activated)

/etc/kernel/postinst.d/zz-flash-kernel:

Using DTB: bcm2711-rpi-4-b.dtb

Installing /lib/firmware/5.15.0-1053-raspi/device-tree/broadcom/bcm2711-rpi-4-b.dtb into /boot/dtbs/5.15.0-1053-raspi/./bcm2711-rpi-4-b.dtb

Taking backup of bcm2711-rpi-4-b.dtb.

Installing new bcm2711-rpi-4-b.dtb.

flash-kernel: deferring update (trigger activated)

Processing triggers for flash-kernel (3.104ubuntu20) ...

Using DTB: bcm2711-rpi-4-b.dtb

Installing /lib/firmware/5.15.0-1053-raspi/device-tree/broadcom/bcm2711-rpi-4-b.dtb into /boot/dtbs/5.15.0-1053-raspi/./bcm2711-rpi-4-b.dtb

Taking backup of bcm2711-rpi-4-b.dtb.

Installing new bcm2711-rpi-4-b.dtb.

flash-kernel: installing version 5.15.0-1053-raspi

Taking backup of vmlinuz.

Installing new vmlinuz.

Taking backup of initrd.img.

Installing new initrd.img.

Taking backup of uboot_rpi_arm64.bin.

Installing new uboot_rpi_arm64.bin.

Taking backup of uboot_rpi_4.bin.

Installing new uboot_rpi_4.bin.

Taking backup of uboot_rpi_3.bin.

Installing new uboot_rpi_3.bin.

Generating boot script u-boot image... done.

Taking backup of boot.scr.

Installing new boot.scr.

Taking backup of start4.elf.

Installing new start4.elf.

Taking backup of fixup4db.dat.

Installing new fixup4db.dat.

Taking backup of start.elf.

Installing new pca953x.dtbo.

(snip)

Taking backup of iqaudio-dacplus.dtbo.

Installing new iqaudio-dacplus.dtbo.

Taking backup of hifiberry-dac.dtbo.

Installing new hifiberry-dac.dtbo.

Taking backup of spi-rtc.dtbo.

Installing new spi-rtc.dtbo.

Taking backup of spi2-1cs.dtbo.

Installing new spi2-1cs.dtbo.

Taking backup of cap1106.dtbo.

Installing new cap1106.dtbo.

Taking backup of w5500.dtbo.

Installing new w5500.dtbo.

Taking backup of minipitft13.dtbo.

Installing new minipitft13.dtbo.

Taking backup of README.

Installing new README.

Scanning processes... Scanning processor microcode... Scanning linux images...

Running kernel seems to be up-to-date.

Failed to check for processor microcode upgrades.

No services need to be restarted.

No containers need to be restarted.

No user sessions are running outdated binaries.

No VM guests are running outdated hypervisor (qemu) binaries on this host.

While the installation was still running, all pods became Running. Success!

$ kubectl get pod -n kube-system
NAME                                       READY   STATUS    RESTARTS      AGE
calico-kube-controllers-5fc7d6cf67-t24hh   1/1     Running   0             32m
calico-node-dq6xq                          1/1     Running   0             27m
coredns-76f75df574-mzng7                   1/1     Running   0             58m
coredns-76f75df574-t265x                   1/1     Running   0             58m
etcd-k8s-ctrl1                             1/1     Running   0             58m
kube-apiserver-k8s-ctrl1                   1/1     Running   0             58m
kube-controller-manager-k8s-ctrl1          1/1     Running   1 (48m ago)   58m
kube-proxy-d64kt                           1/1     Running   0             58m
kube-scheduler-k8s-ctrl1                   1/1     Running   1 (47m ago)   58m
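The other Raspberry Pi nodes presumably need the same package (an assumption on my part; the steps above only show k8s-ctrl1). As the apt log shows, the meta package pulls in a versioned package named after the kernel release, so a quick check is to derive that name and ask dpkg about it. A minimal sketch, with the hypothetical helper name `extra_modules_pkg`:

```shell
#!/usr/bin/env bash
# Sketch: derive the versioned extra-modules package name from a kernel release string.
# The naming pattern follows the apt log above
# (kernel 5.15.0-1053-raspi -> linux-modules-extra-5.15.0-1053-raspi).
extra_modules_pkg() {
  printf 'linux-modules-extra-%s\n' "$1"
}

# Demo with the kernel release seen in the log.
extra_modules_pkg "5.15.0-1053-raspi"
```

On a node, `dpkg -s "$(extra_modules_pkg "$(uname -r)")"` then reports whether the package matching the running kernel is installed.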

Later, when I searched for linux-modules-extra-raspi, I also found the article below.

kubeadm join (for the remaining control-plane nodes)

Running kubeadm join --control-plane (k8s-ctrl2)

Run this on k8s-ctrl2.

$ sudo kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a \
    --control-plane --certificate-key cf6b62a26809ce3e4126c782badb0853e02d97dab46f90d7e895dd96ac1b3a1d

When the certificates are not found (deleted)

When I ran it, I got the message below. This happens when kubeadm join is run some time after kubeadm init: as the kubeadm init output notes, the uploaded certificates are deleted after two hours.

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
Secret "kubeadm-certs" was not found in the "kube-system" Namespace. This Secret might have expired. Please, run `kubeadm init phase upload-certs --upload-certs` on a control plane to generate a new one

Re-uploading the certificates (upload-certs)

Following the instructions, re-upload the certificates on k8s-ctrl1. This prints a new certificate key.

wurly@k8s-ctrl1:~$ sudo kubeadm init phase upload-certs --upload-certs
[sudo] password for wurly:
I0507 08:03:31.056618 32741 version.go:256] remote version is much newer: v1.30.0; falling back to: stable-1.29
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key: 0626e7a06e87e81e158ac7d9d1bb1f4f8adbcd29b0ff34659de706ec533cf105
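Whether a re-upload is needed can be estimated up front from the timestamps. The following is a minimal sketch of that arithmetic, assuming the two-hour lifetime stated in the kubeadm init output; the function name `certs_expired` is hypothetical, and the demo uses seconds counted from midnight of 05-06 instead of real epoch values.

```shell
#!/usr/bin/env bash
# Sketch: decide whether the uploaded certificates have probably been deleted.
# 7200 s = the two hours mentioned in the kubeadm init output.
CERTS_TTL_SECONDS=7200

# Args: upload time and current time, both in seconds on the same clock
# (normally Unix epoch seconds).
certs_expired() {
  local uploaded="$1" now="$2"
  [ $(( now - uploaded )) -ge "$CERTS_TTL_SECONDS" ]
}

# Times taken from the logs above: init at 22:26 on 05-06, re-upload at 08:03 on 05-07.
uploaded=$(( 22 * 3600 + 26 * 60 ))
now=$(( 24 * 3600 + 8 * 3600 + 3 * 60 ))

if certs_expired "$uploaded" "$now"; then
  echo "certificates likely deleted; re-run: kubeadm init phase upload-certs --upload-certs"
fi
```

With GNU date, real values could be supplied as `certs_expired "$(date -d '2024-05-06 22:26' +%s)" "$(date +%s)"`. Here roughly nine and a half hours had passed, so the Secret was already gone.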

Re-running kubeadm join --control-plane (k8s-ctrl2)

Run kubeadm join again on k8s-ctrl2, this time with the new certificate key.

$ sudo kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a \
    --control-plane --certificate-key 0626e7a06e87e81e158ac7d9d1bb1f4f8adbcd29b0ff34659de706ec533cf105

This time the join succeeded.

wurly@k8s-ctrl2:~$ sudo kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a \
    --control-plane --certificate-key 0626e7a06e87e81e158ac7d9d1bb1f4f8adbcd29b0ff34659de706ec533cf105
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[preflight] Running pre-flight checks before initializing the new control plane instance
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
W0507 08:05:48.572380 17476 checks.go:835] detected that the sandbox image "registry.k8s.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.
[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[download-certs] Saving the certificates to the folder: "/etc/kubernetes/pki"
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-ctrl2 localhost] and IPs [192.168.1.202 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-ctrl2 localhost] and IPs [192.168.1.202 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-ctrl2 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.202 192.168.1.100]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"
[certs] Using the existing "sa" key
[kubeconfig] Generating kubeconfig files
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[check-etcd] Checking that the etcd cluster is healthy
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
[etcd] Announced new etcd member joining to the existing etcd cluster
[etcd] Creating static Pod manifest for "etcd"
{"level":"warn","ts":"2024-05-07T08:06:02.165095+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x400043f880/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:06:02.275197+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x400043f880/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:06:02.429338+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x400043f880/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:06:02.67635+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x400043f880/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:06:03.043254+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x400043f880/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:06:03.582796+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x400043f880/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:06:04.387198+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x400043f880/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:06:05.531451+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x400043f880/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s
The 'update-status' phase is deprecated and will be removed in a future release. Currently it performs no operation
[mark-control-plane] Marking the node k8s-ctrl2 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node k8s-ctrl2 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.
* The Kubelet was informed of the new secure connection details.
* Control plane label and taint were applied to the new node.
* The Kubernetes control plane instances scaled up.
* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

        mkdir -p $HOME/.kube
        sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
        sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

k8s-ctrl2 is added. At first its STATUS is NotReady. (k in the commands below is a shell alias for kubectl.)

$ k get node
NAME        STATUS     ROLES           AGE   VERSION
k8s-ctrl1   Ready      control-plane   9h    v1.29.4
k8s-ctrl2   NotReady   <none>          2s    v1.29.4

Once the STATUS becomes Ready, the addition is complete.

$ k get node
NAME        STATUS   ROLES           AGE     VERSION
k8s-ctrl1   Ready    control-plane   9h      v1.29.4
k8s-ctrl2   Ready    control-plane   2m33s   v1.29.4
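The same check can be scripted by counting the control-plane nodes that report Ready. This is a minimal sketch over the plain-text table; the function name `ready_control_planes` is hypothetical, and the demo input is the output shown above.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get node` output on stdin and count Ready control-plane nodes.
ready_control_planes() {
  # Skip the header row; column 2 is STATUS and column 3 is ROLES.
  awk 'NR > 1 && $2 == "Ready" && $3 ~ /control-plane/ { n++ } END { print n + 0 }'
}

# Demo input: the table shown above, after k8s-ctrl2 became Ready.
ready_control_planes <<'EOF'
NAME        STATUS   ROLES           AGE     VERSION
k8s-ctrl1   Ready    control-plane   9h      v1.29.4
k8s-ctrl2   Ready    control-plane   2m33s   v1.29.4
EOF
# prints 2
```

On a live cluster the input would come from `kubectl get node | ready_control_planes`; once k8s-ctrl3 has joined and become Ready, the count should reach 3.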

Running kubeadm join --control-plane (k8s-ctrl3)

Next, run kubeadm join on k8s-ctrl3.

Result of kubeadm join --control-plane (k8s-ctrl3)

wurly@k8s-ctrl3:~$ sudo kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a \
    --control-plane --certificate-key 0626e7a06e87e81e158ac7d9d1bb1f4f8adbcd29b0ff34659de706ec533cf105
[sudo] password for wurly:
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[preflight] Running pre-flight checks before initializing the new control plane instance
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
W0507 08:08:39.636962 17354 checks.go:835] detected that the sandbox image "registry.k8s.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.
[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[download-certs] Saving the certificates to the folder: "/etc/kubernetes/pki"
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-ctrl3 localhost] and IPs [192.168.1.203 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-ctrl3 localhost] and IPs [192.168.1.203 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-ctrl3 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.1.203 192.168.1.100]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"
[certs] Using the existing "sa" key
[kubeconfig] Generating kubeconfig files
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[check-etcd] Checking that the etcd cluster is healthy
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
[etcd] Announced new etcd member joining to the existing etcd cluster
[etcd] Creating static Pod manifest for "etcd"
{"level":"warn","ts":"2024-05-07T08:09:11.367848+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:09:11.521986+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:09:11.684702+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:09:11.922983+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:09:12.268964+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:09:12.797185+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:09:13.575141+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:09:14.750511+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
{"level":"warn","ts":"2024-05-07T08:09:16.463716+0900","logger":"etcd-client","caller":"v3@v3.5.10/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"etcd-endpoints://0x4000299dc0/192.168.1.201:2379","attempt":0,"error":"rpc error: code = FailedPrecondition desc = etcdserver: can only promote a learner member which is in sync with leader"}
[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s
The 'update-status' phase is deprecated and will be removed in a future release.
Currently it performs no operation [mark-control-plane] Marking the node k8s-ctrl3 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers] [mark-control-plane] Marking the node k8s-ctrl3 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule] This node has joined the cluster and a new control plane instance was created: * Certificate signing request was sent to apiserver and approval was received. * The Kubelet was informed of the new secure connection details. * Control plane label and taint were applied to the new node. * The Kubernetes control plane instances scaled up. * A new etcd member was added to the local/stacked etcd cluster. To start administering your cluster from this node, you need to run the following as a regular user: mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config Run 'kubectl get nodes' to see this node join the cluster.

k8s-ctrl3 is added to the cluster. Its STATUS is NotReady at first.

$ k get node
NAME        STATUS     ROLES           AGE     VERSION
k8s-ctrl1   Ready      control-plane   9h      v1.29.4
k8s-ctrl2   Ready      control-plane   3m22s   v1.29.4
k8s-ctrl3   NotReady   control-plane   13s     v1.29.4

The STATUS then changes to Ready, and the node has been added.

$ k get node
NAME        STATUS   ROLES           AGE     VERSION
k8s-ctrl1   Ready    control-plane   9h      v1.29.4
k8s-ctrl2   Ready    control-plane   4m55s   v1.29.4
k8s-ctrl3   Ready    control-plane   106s    v1.29.4
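Instead of re-running `k get node` by hand until the new node turns Ready, the wait can be scripted. The following is only a sketch and was not part of the original procedure: the function names `not_ready_nodes` and `wait_all_ready` are hypothetical, and the filter assumes STATUS is the second column of `kubectl get nodes`, as in the output above.

```shell
# Hypothetical helper: print the names of nodes whose STATUS is not exactly "Ready".
# Reads `kubectl get nodes` output on stdin (one header line, then one row per node).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Hypothetical helper: poll until every node is Ready or the time limit is reached.
# $1 = time limit in seconds (default 300), $2 = poll interval in seconds (default 5).
wait_all_ready() {
  local limit="${1:-300}" interval="${2:-5}" waited=0 out pending
  while :; do
    # Stop with status 2 if kubectl itself fails (e.g. API server unreachable).
    out="$(kubectl get nodes)" || return 2
    pending="$(printf '%s\n' "$out" | not_ready_nodes)"
    [ -z "$pending" ] && return 0
    if [ "$waited" -ge "$limit" ]; then
      echo "still not Ready: $pending" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}
```

For example, `wait_all_ready 300 5` returns once all nodes report Ready. For a single node, the built-in `kubectl wait --for=condition=Ready node/k8s-ctrl3 --timeout=300s` does the same job without any parsing.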

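This completes the construction of the control plane.

(Reference) Pods after the control plane was built

The pods were in the following state.

I noticed that there is only one calico-kube-controllers pod (it exists only on k8s-ctrl1).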

$ k get pod -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS      AGE
kube-system   calico-kube-controllers-5fc7d6cf67-t24hh   1/1     Running   2 (73m ago)   9h
kube-system   calico-node-dq6xq                          1/1     Running   2 (73m ago)   9h
kube-system   calico-node-gqkvn                          1/1     Running   0             12m
kube-system   calico-node-tzthw                          1/1     Running   0             15m
kube-system   coredns-76f75df574-mzng7                   1/1     Running   2 (73m ago)   9h
kube-system   coredns-76f75df574-t265x                   1/1     Running   2 (73m ago)   9h
kube-system   etcd-k8s-ctrl1                             1/1     Running   2 (73m ago)   9h
kube-system   etcd-k8s-ctrl2                             1/1     Running   0             15m
kube-system   etcd-k8s-ctrl3                             1/1     Running   0             12m
kube-system   kube-apiserver-k8s-ctrl1                   1/1     Running   2 (73m ago)   9h
kube-system   kube-apiserver-k8s-ctrl2                   1/1     Running   0             15m
kube-system   kube-apiserver-k8s-ctrl3                   1/1     Running   0             12m
kube-system   kube-controller-manager-k8s-ctrl1          1/1     Running   3 (73m ago)   9h
kube-system   kube-controller-manager-k8s-ctrl2          1/1     Running   0             15m
kube-system   kube-controller-manager-k8s-ctrl3          1/1     Running   0             12m
kube-system   kube-proxy-ctspc                           1/1     Running   0             15m
kube-system   kube-proxy-d64kt                           1/1     Running   2 (73m ago)   9h
kube-system   kube-proxy-tmqkh                           1/1     Running   0             12m
kube-system   kube-scheduler-k8s-ctrl1                   1/1     Running   4 (13m ago)   9h
kube-system   kube-scheduler-k8s-ctrl2                   1/1     Running   0             15m
kube-system   kube-scheduler-k8s-ctrl3                   1/1     Running   0             12m
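The single calico-kube-controllers pod is most likely expected. In the standard Calico manifest it is a Deployment with one replica, whereas calico-node and kube-proxy are DaemonSets that run one pod per node. This can be checked with a sketch like the following, which assumes the `kube-system` namespace seen in the output above. The function names `deployment_replicas` and `daemonset_counts` are hypothetical.

```shell
# Hypothetical helper: print the desired replica count of a Deployment.
# $1 = namespace, $2 = Deployment name.
deployment_replicas() {
  kubectl -n "$1" get deployment "$2" -o jsonpath='{.spec.replicas}'
}

# Hypothetical helper: print "<name> <desired> <ready>" for each DaemonSet
# in a namespace, so per-node workloads can be told apart from Deployments.
# $1 = namespace.
daemonset_counts() {
  kubectl -n "$1" get daemonset \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.desiredNumberScheduled}{" "}{.status.numberReady}{"\n"}{end}'
}
```

For example, `deployment_replicas kube-system calico-kube-controllers` should print the configured replica count, and `daemonset_counts kube-system` should list calico-node and kube-proxy with one pod per node.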

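(Reference) Behavior when k8s-ctrl1 is disconnected from the network

As a test, I disconnected k8s-ctrl1 from the network.

After about five minutes, a new calico-kube-controllers pod was created on k8s-ctrl3, and the calico-kube-controllers pod on k8s-ctrl1 started terminating.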

$ k get pod -o wide
NAME                                       READY   STATUS        RESTARTS        AGE   IP              NODE        NOMINATED NODE   READINESS GATES
calico-kube-controllers-5fc7d6cf67-t24hh   1/1     Terminating   2 (108m ago)    10h   172.16.190.25   k8s-ctrl1   <none>           <none>
calico-kube-controllers-5fc7d6cf67-zhzvh   1/1     Running       0               29s   172.16.35.2     k8s-ctrl3   <none>           <none>
calico-node-dq6xq                          1/1     Running       2 (108m ago)    9h    192.168.1.201   k8s-ctrl1   <none>           <none>
calico-node-gqkvn                          1/1     Running       1 (7m14s ago)   47m   192.168.1.203   k8s-ctrl3   <none>           <none>
calico-node-tzthw                          1/1     Running       0               50m   192.168.1.202   k8s-ctrl2   <none>           <none>
coredns-76f75df574-dc4fc                   1/1     Running       0               29s   172.16.35.1     k8s-ctrl3   <none>           <none>
coredns-76f75df574-jsbp4                   1/1     Running       0               29s   172.16.164.1    k8s-ctrl2   <none>           <none>
coredns-76f75df574-mzng7                   1/1     Terminating   2 (108m ago)    10h   172.16.190.23   k8s-ctrl1   <none>           <none>
coredns-76f75df574-t265x                   1/1     Terminating   2 (108m ago)    10h   172.16.190.24   k8s-ctrl1   <none>           <none>
etcd-k8s-ctrl1                             1/1     Running       2 (108m ago)    10h   192.168.1.201   k8s-ctrl1   <none>           <none>
etcd-k8s-ctrl2                             1/1     Running       0               50m   192.168.1.202   k8s-ctrl2   <none>           <none>
etcd-k8s-ctrl3                             1/1     Running       1 (7m14s ago)   47m   192.168.1.203   k8s-ctrl3   <none>           <none>
kube-apiserver-k8s-ctrl1                   1/1     Running       2 (108m ago)    10h   192.168.1.201   k8s-ctrl1   <none>           <none>
kube-apiserver-k8s-ctrl2                   1/1     Running       0               50m   192.168.1.202   k8s-ctrl2   <none>           <none>
kube-apiserver-k8s-ctrl3                   1/1     Running       1 (7m14s ago)   47m   192.168.1.203   k8s-ctrl3   <none>           <none>
kube-controller-manager-k8s-ctrl1          1/1     Running       3 (108m ago)    10h   192.168.1.201   k8s-ctrl1   <none>           <none>
kube-controller-manager-k8s-ctrl2          1/1     Running       0               50m   192.168.1.202   k8s-ctrl2   <none>           <none>
kube-controller-manager-k8s-ctrl3          1/1     Running       1 (7m14s ago)   47m   192.168.1.203   k8s-ctrl3   <none>           <none>
kube-proxy-ctspc                           1/1     Running       0               50m   192.168.1.202   k8s-ctrl2   <none>           <none>
kube-proxy-d64kt                           1/1     Running       2 (108m ago)    10h   192.168.1.201   k8s-ctrl1   <none>           <none>
kube-proxy-tmqkh                           1/1     Running       1 (7m14s ago)   47m   192.168.1.203   k8s-ctrl3   <none>           <none>
kube-scheduler-k8s-ctrl1                   1/1     Running       4 (48m ago)     10h   192.168.1.201   k8s-ctrl1   <none>           <none>
kube-scheduler-k8s-ctrl2                   1/1     Running       0               50m   192.168.1.202   k8s-ctrl2   <none>           <none>
kube-scheduler-k8s-ctrl3                   1/1     Running       1 (7m14s ago)   47m   192.168.1.203   k8s-ctrl3   <none>           <none>
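A delay of roughly five minutes is consistent with Kubernetes defaults, although this was not verified on this cluster. Pods normally receive a 300-second toleration for the `node.kubernetes.io/unreachable` and `node.kubernetes.io/not-ready` taints, so they are evicted only after the node has been unreachable for that long. The sketch below shows one way to look at both sides of this. The function names `pods_on_node` and `pod_tolerations` are hypothetical, and the filter assumes NODE is the third field from the right in `kubectl get pod -o wide`, as in the output above.

```shell
# Hypothetical helper: list "<pod> <status>" for the pods placed on one node.
# Reads `kubectl get pod -o wide` output on stdin; $1 = node name.
# RESTARTS can contain spaces ("2 (108m ago)"), so NODE is located from the
# right: the last three fields are NODE, NOMINATED NODE, READINESS GATES.
pods_on_node() {
  awk -v node="$1" 'NR > 1 && $(NF - 2) == node { print $1, $3 }'
}

# Hypothetical helper: print "<key> <effect> <tolerationSeconds>" for each
# toleration of a pod. $1 = namespace, $2 = pod name.
pod_tolerations() {
  kubectl -n "$1" get pod "$2" \
    -o jsonpath='{range .spec.tolerations[*]}{.key}{" "}{.effect}{" "}{.tolerationSeconds}{"\n"}{end}'
}
```

For example, `kubectl get pod -o wide | pods_on_node k8s-ctrl1` shows which pods on the disconnected node are Terminating and which are still reported as Running.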

kubeadm join (joining the worker node)

(Reference) State before running kubeadm join

Before running kubeadm join, I check the state of the pods. At this point only the control plane exists.

$ k get pod
NAME                                       READY   STATUS    RESTARTS        AGE
calico-kube-controllers-5fc7d6cf67-zhzvh   1/1     Running   1 (5m34s ago)   4d2h
calico-node-dq6xq                          1/1     Running   3 (5m26s ago)   4d12h
calico-node-gqkvn                          1/1     Running   2 (5m34s ago)   4d2h
calico-node-tzthw                          1/1     Running   1 (5m34s ago)   4d2h
coredns-76f75df574-dc4fc                   1/1     Running   1 (5m34s ago)   4d2h
coredns-76f75df574-jsbp4                   1/1     Running   1 (5m34s ago)   4d2h
etcd-k8s-ctrl1                             1/1     Running   3 (5m26s ago)   4d12h
etcd-k8s-ctrl2                             1/1     Running   1 (5m34s ago)   4d2h
etcd-k8s-ctrl3                             1/1     Running   2 (5m34s ago)   4d2h
kube-apiserver-k8s-ctrl1                   1/1     Running   3 (5m26s ago)   4d12h
kube-apiserver-k8s-ctrl2                   1/1     Running   1 (5m34s ago)   4d2h
kube-apiserver-k8s-ctrl3                   1/1     Running   2 (5m35s ago)   4d2h
kube-controller-manager-k8s-ctrl1          1/1     Running   4 (5m26s ago)   4d12h
kube-controller-manager-k8s-ctrl2          1/1     Running   1 (5m34s ago)   4d2h
kube-controller-manager-k8s-ctrl3          1/1     Running   2 (5m34s ago)   4d2h
kube-proxy-ctspc                           1/1     Running   1 (5m34s ago)   4d2h
kube-proxy-d64kt                           1/1     Running   3 (5m26s ago)   4d12h
kube-proxy-tmqkh                           1/1     Running   2 (5m34s ago)   4d2h
kube-scheduler-k8s-ctrl1                   1/1     Running   5 (5m26s ago)   4d12h
kube-scheduler-k8s-ctrl2                   1/1     Running   1 (5m34s ago)   4d2h
kube-scheduler-k8s-ctrl3                   1/1     Running   2 (5m34s ago)   4d2h
$ k get nodes
NAME        STATUS   ROLES           AGE     VERSION
k8s-ctrl1   Ready    control-plane   4d12h   v1.29.4
k8s-ctrl2   Ready    control-plane   4d2h    v1.29.4
k8s-ctrl3   Ready    control-plane   4d2h    v1.29.4

Running kubeadm join

Run kubeadm join on the worker node.

sudo kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a --v=5

Result of kubeadm join

When I ran it, the command could not get past the following message.

wurly@k8s-worker1:~$ sudo kubeadm join 192.168.1.100:6443 --token hdie35.u9airq6ychkt8amq \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a --v=5
[sudo] password for wurly:
I0511 02:25:53.304043 3224 join.go:413] [preflight] found NodeName empty; using OS hostname as NodeName
I0511 02:25:53.306686 3224 initconfiguration.go:122] detected and using CRI socket: unix:///var/run/containerd/containerd.sock
[preflight] Running pre-flight checks
I0511 02:25:53.306741 3224 preflight.go:93] [preflight] Running general checks
I0511 02:25:53.306785 3224 checks.go:280] validating the existence of file /etc/kubernetes/kubelet.conf
I0511 02:25:53.306794 3224 checks.go:280] validating the existence of file /etc/kubernetes/bootstrap-kubelet.conf
I0511 02:25:53.306802 3224 checks.go:104] validating the container runtime
I0511 02:25:53.326536 3224 checks.go:639] validating whether swap is enabled or not
I0511 02:25:53.326595 3224 checks.go:370] validating the presence of executable crictl
I0511 02:25:53.326622 3224 checks.go:370] validating the presence of executable conntrack
I0511 02:25:53.326640 3224 checks.go:370] validating the presence of executable ip
I0511 02:25:53.326660 3224 checks.go:370] validating the presence of executable iptables
I0511 02:25:53.326682 3224 checks.go:370] validating the presence of executable mount
I0511 02:25:53.326703 3224 checks.go:370] validating the presence of executable nsenter
I0511 02:25:53.326723 3224 checks.go:370] validating the presence of executable ebtables
I0511 02:25:53.326744 3224 checks.go:370] validating the presence of executable ethtool
I0511 02:25:53.326761 3224 checks.go:370] validating the presence of executable socat
I0511 02:25:53.326781 3224 checks.go:370] validating the presence of executable tc
I0511 02:25:53.326795 3224 checks.go:370] validating the presence of executable touch
I0511 02:25:53.326811 3224 checks.go:516] running all checks
I0511 02:25:53.336882 3224 checks.go:401] checking whether the given node name is valid and reachable using net.LookupHost
I0511 02:25:53.337071 3224 checks.go:605] validating kubelet version
I0511 02:25:53.382237 3224 checks.go:130] validating if the "kubelet" service is enabled and active
I0511 02:25:53.390207 3224 checks.go:203] validating availability of port 10250
I0511 02:25:53.390336 3224 checks.go:280] validating the existence of file /etc/kubernetes/pki/ca.crt
I0511 02:25:53.390346 3224 checks.go:430] validating if the connectivity type is via proxy or direct
I0511 02:25:53.390367 3224 checks.go:329] validating the contents of file /proc/sys/net/bridge/bridge-nf-call-iptables
I0511 02:25:53.390389 3224 checks.go:329] validating the contents of file /proc/sys/net/ipv4/ip_forward
I0511 02:25:53.390405 3224 join.go:532] [preflight] Discovering cluster-info
I0511 02:25:53.390417 3224 token.go:80] [discovery] Created cluster-info discovery client, requesting info from "192.168.1.100:6443"
I0511 02:25:53.416226 3224 token.go:223] [discovery] The cluster-info ConfigMap does not yet contain a JWS signature for token ID "hdie35", will try again
I0511 02:25:58.596077 3224 token.go:223] [discovery] The cluster-info ConfigMap does not yet contain a JWS signature for token ID "hdie35", will try again
(omitted)
I0511 02:28:58.158711 3224 token.go:223] [discovery] The cluster-info ConfigMap does not yet contain a JWS signature for token ID "hdie35", will try again
I0511 02:29:03.706321 3224 token.go:223] [discovery] The cluster-info ConfigMap does not yet contain a JWS signature for token ID "hdie35", will try again

Token expiry

The following message appears to indicate the problem.

The cluster-info ConfigMap does not yet contain a JWS signature for token ID "hdie35", will try again

Judging from this, the token appears to have expired. In my case this happened because I worked on the cluster over several days. (It should not be a problem if you do all the work in one go.) I recreate the token.
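Bootstrap tokens created by kubeadm expire after 24 hours by default, and an expired token no longer shows up in `kubeadm token list`. A quick check before attempting a join could look like the sketch below. The function name `token_listed` is hypothetical, and it only filters the table printed by `kubeadm token list`, assuming the token is the first column.

```shell
# Hypothetical helper: succeed (exit 0) if the given bootstrap token is still
# listed, fail (exit 1) otherwise.
# Reads `kubeadm token list` output on stdin; $1 = full token ("id.secret").
token_listed() {
  awk -v t="$1" 'NR > 1 && $1 == t { found = 1 } END { exit (found ? 0 : 1) }'
}
```

On a control-plane node this could be used as `sudo kubeadm token list | token_listed hdie35.u9airq6ychkt8amq || echo "token missing or expired"`.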

Regenerating the token

wurly@k8s-ctrl1:~$ kubeadm token list
wurly@k8s-ctrl1:~$ sudo kubeadm token list
[sudo] password for wurly:
wurly@k8s-ctrl1:~$ sudo kubeadm token create
d8gufx.xhgtb45qps7h7yhv
wurly@k8s-ctrl1:~$ sudo kubeadm token list
TOKEN                     TTL   EXPIRES                USAGES                   DESCRIPTION   EXTRA GROUPS
d8gufx.xhgtb45qps7h7yhv   23h   2024-05-12T02:29:57Z   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
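`kubeadm token create` also accepts `--print-join-command`, which prints a complete `kubeadm join` line for a worker (endpoint, new token, and CA cert hash), so nothing has to be assembled by hand. A minimal wrapper, assuming it runs on a control-plane node with sudo rights, might look like this. The function name `worker_join_command` is hypothetical.

```shell
# Hypothetical wrapper: create a fresh bootstrap token and print the matching
# `kubeadm join ...` command for a worker node.
# $1 = token lifetime (optional, e.g. "2h"); without it kubeadm uses its
# default of 24h.
worker_join_command() {
  if [ -n "${1:-}" ]; then
    sudo kubeadm token create --ttl "$1" --print-join-command
  else
    sudo kubeadm token create --print-join-command
  fi
}
```

The printed line can then be run on the worker, with `sudo` and, if desired, `--v=5` added.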

Re-running kubeadm join

Change the token argument and run the command again.

sudo kubeadm join 192.168.1.100:6443 --token d8gufx.xhgtb45qps7h7yhv \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a --v=5
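Because the token and hash are long strings copied between machines, it can help to sanity-check them before running the join; this catches damage such as a stray space or line break inside the hash. The sketch below is not part of the original procedure and the function names are hypothetical. The patterns follow kubeadm's documented token format (`[a-z0-9]{6}.[a-z0-9]{16}`) and a `sha256:` prefix followed by 64 lowercase hex digits, as printed by kubeadm.

```shell
# Hypothetical helper: succeed if $1 looks like a kubeadm bootstrap token.
valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Hypothetical helper: succeed if $1 looks like a value for
# --discovery-token-ca-cert-hash ("sha256:" followed by 64 hex digits).
valid_ca_cert_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

Note that these checks only look at the shape of the values. They cannot tell whether the token has expired or whether the hash matches the cluster's CA.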

Result of re-running kubeadm join

As shown below, the node joined the cluster successfully.

wurly@k8s-worker1:~$ sudo kubeadm join 192.168.1.100:6443 --token d8gufx.xhgtb45qps7h7yhv \
    --discovery-token-ca-cert-hash sha256:cd58094931470815be7e0b791357ce4ca6907cb861858915e17752baa6cfc18a --v=5
I0511 02:30:54.365840 3626 join.go:413] [preflight] found NodeName empty; using OS hostname as NodeName
I0511 02:30:54.365949 3626 initconfiguration.go:122] detected and using CRI socket: unix:///var/run/containerd/containerd.sock
[preflight] Running pre-flight checks
I0511 02:30:54.365999 3626 preflight.go:93] [preflight] Running general checks
I0511 02:30:54.366035 3626 checks.go:280] validating the existence of file /etc/kubernetes/kubelet.conf
I0511 02:30:54.366048 3626 checks.go:280] validating the existence of file /etc/kubernetes/bootstrap-kubelet.conf
I0511 02:30:54.366057 3626 checks.go:104] validating the container runtime
I0511 02:30:54.382819 3626 checks.go:639] validating whether swap is enabled or not
I0511 02:30:54.382869 3626 checks.go:370] validating the presence of executable crictl
I0511 02:30:54.382893 3626 checks.go:370] validating the presence of executable conntrack
I0511 02:30:54.382909 3626 checks.go:370] validating the presence of executable ip
I0511 02:30:54.382927 3626 checks.go:370] validating the presence of executable iptables
I0511 02:30:54.382945 3626 checks.go:370] validating the presence of executable mount
I0511 02:30:54.382962 3626 checks.go:370] validating the presence of executable nsenter
I0511 02:30:54.382980 3626 checks.go:370] validating the presence of executable ebtables
I0511 02:30:54.383006 3626 checks.go:370] validating the presence of executable ethtool
I0511 02:30:54.383021 3626 checks.go:370] validating the presence of executable socat
I0511 02:30:54.383036 3626 checks.go:370] validating the presence of executable tc
I0511 02:30:54.383054 3626 checks.go:370] validating the presence of executable touch
I0511 02:30:54.383077 3626 checks.go:516] running all checks
I0511 02:30:54.394226 3626 checks.go:401] checking whether the given node name is valid and reachable using net.LookupHost
I0511 02:30:54.394386 3626 checks.go:605] validating kubelet version
I0511 02:30:54.429461 3626 checks.go:130] validating if the "kubelet" service is enabled and active
I0511 02:30:54.436446 3626 checks.go:203] validating availability of port 10250
I0511 02:30:54.436570 3626 checks.go:280] validating the existence of file /etc/kubernetes/pki/ca.crt
I0511 02:30:54.436582 3626 checks.go:430] validating if the connectivity type is via proxy or direct
I0511 02:30:54.436609 3626 checks.go:329] validating the contents of file /proc/sys/net/bridge/bridge-nf-call-iptables
I0511 02:30:54.436642 3626 checks.go:329] validating the contents of file /proc/sys/net/ipv4/ip_forward
I0511 02:30:54.436666 3626 join.go:532] [preflight] Discovering cluster-info
I0511 02:30:54.436685 3626 token.go:80] [discovery] Created cluster-info discovery client, requesting info from "192.168.1.100:6443"
I0511 02:30:54.466412 3626 token.go:118] [discovery] Requesting info from "192.168.1.100:6443" again to validate TLS against the pinned public key
I0511 02:30:54.498645 3626 token.go:135] [discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.1.100:6443"
I0511 02:30:54.498675 3626 discovery.go:52] [discovery] Using provided TLSBootstrapToken as authentication credentials for the join process
I0511 02:30:54.498692 3626 join.go:546] [preflight] Fetching init configuration
I0511 02:30:54.498702 3626 join.go:592] [preflight] Retrieving KubeConfig objects
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
I0511 02:30:54.545089 3626 kubeproxy.go:55] attempting to download the KubeProxyConfiguration from ConfigMap "kube-proxy"
I0511 02:30:54.563297 3626 kubelet.go:74] attempting to download the KubeletConfiguration from ConfigMap "kubelet-config"
I0511 02:30:54.579250 3626 initconfiguration.go:114] skip CRI socket detection, fill with the default CRI socket unix:///var/run/containerd/containerd.sock
I0511 02:30:54.580713 3626 interface.go:432] Looking for default routes with IPv4 addresses
I0511 02:30:54.580750 3626 interface.go:437] Default route transits interface "eno0"
I0511 02:30:54.581417 3626 interface.go:209] Interface eno0 is up
I0511 02:30:54.581560 3626 interface.go:257] Interface "eno0" has 3 addresses :[192.168.1.211/24 240d:1a:1d8:a600:b6a9:fcff:fe21:8100/64 fe80::b6a9:fcff:fe21:8100/64].
I0511 02:30:54.581610 3626 interface.go:224] Checking addr 192.168.1.211/24.
I0511 02:30:54.581636 3626 interface.go:231] IP found 192.168.1.211
I0511 02:30:54.581681 3626 interface.go:263] Found valid IPv4 address 192.168.1.211 for interface "eno0".
I0511 02:30:54.581732 3626 interface.go:443] Found active IP 192.168.1.211
I0511 02:30:54.590899 3626 preflight.go:104] [preflight] Running configuration dependant checks
I0511 02:30:54.590937 3626 controlplaneprepare.go:225] [download-certs] Skipping certs download
I0511 02:30:54.590973 3626 kubelet.go:121] [kubelet-start] writing bootstrap kubelet config file at /etc/kubernetes/bootstrap-kubelet.conf
I0511 02:30:54.593788 3626 kubelet.go:136] [kubelet-start] writing CA certificate at /etc/kubernetes/pki/ca.crt
I0511 02:30:54.595514 3626 kubelet.go:157] [kubelet-start] Checking for an existing Node in the cluster with name "k8s-worker1" and status "Ready"
I0511 02:30:54.608006 3626 kubelet.go:172] [kubelet-start] Stopping the kubelet
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
I0511 02:30:55.941393 3626 cert_rotation.go:137] Starting client certificate rotation controller
I0511 02:30:55.943223 3626 kubelet.go:220] [kubelet-start] preserving the crisocket information for the node
I0511 02:30:55.943289 3626 patchnode.go:31] [patchnode] Uploading the CRI Socket information "unix:///var/run/containerd/containerd.sock" to the Node API object "k8s-worker1" as an annotation
This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.
Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

(Reference) State of the pods after the worker joined the cluster

The worker has joined the cluster. At first it is NotReady.

$ k get nodes
NAME          STATUS     ROLES           AGE     VERSION
k8s-ctrl1     Ready      control-plane   4d13h   v1.29.4
k8s-ctrl2     Ready      control-plane   4d3h    v1.29.4
k8s-ctrl3     Ready      control-plane   4d3h    v1.29.4
k8s-worker1   NotReady   <none>          37s     v1.29.4

It then became Ready.

$ k get nodes
NAME          STATUS   ROLES           AGE     VERSION
k8s-ctrl1     Ready    control-plane   4d13h   v1.29.4
k8s-ctrl2     Ready    control-plane   4d3h    v1.29.4
k8s-ctrl3     Ready    control-plane   4d3h    v1.29.4
k8s-worker1   Ready    <none>          93s     v1.29.4

Here is the state of the pods.

You can see that calico-node and kube-proxy pods are now running on the worker.

$ k get pod -o wide
NAME                                       READY   STATUS    RESTARTS      AGE     IP              NODE          NOMINATED NODE   READINESS GATES
calico-kube-controllers-5fc7d6cf67-zhzvh   1/1     Running   1 (34m ago)   4d2h    172.16.35.3     k8s-ctrl3     <none>           <none>
calico-node-dq6xq                          1/1     Running   3 (33m ago)   4d12h   192.168.1.201   k8s-ctrl1     <none>           <none>
calico-node-gqkvn                          1/1     Running   2 (34m ago)   4d3h    192.168.1.203   k8s-ctrl3     <none>           <none>
calico-node-qv8g9                          1/1     Running   0             114s    192.168.1.211   k8s-worker1   <none>           <none>
calico-node-tzthw                          1/1     Running   1 (34m ago)   4d3h    192.168.1.202   k8s-ctrl2     <none>           <none>
coredns-76f75df574-dc4fc                   1/1     Running   1 (34m ago)   4d2h    172.16.35.4     k8s-ctrl3     <none>           <none>
coredns-76f75df574-jsbp4                   1/1     Running   1 (34m ago)   4d2h    172.16.164.2    k8s-ctrl2     <none>           <none>
etcd-k8s-ctrl1                             1/1     Running   3 (33m ago)   4d13h   192.168.1.201   k8s-ctrl1     <none>           <none>
etcd-k8s-ctrl2                             1/1     Running   1 (34m ago)   4d3h    192.168.1.202   k8s-ctrl2     <none>           <none>
etcd-k8s-ctrl3                             1/1     Running   2 (34m ago)   4d3h    192.168.1.203   k8s-ctrl3     <none>           <none>
kube-apiserver-k8s-ctrl1                   1/1     Running   3 (33m ago)   4d13h   192.168.1.201   k8s-ctrl1     <none>           <none>
kube-apiserver-k8s-ctrl2                   1/1     Running   1 (34m ago)   4d3h    192.168.1.202   k8s-ctrl2     <none>           <none>
kube-apiserver-k8s-ctrl3                   1/1     Running   2 (34m ago)   4d3h    192.168.1.203   k8s-ctrl3     <none>           <none>
kube-controller-manager-k8s-ctrl1          1/1     Running   4 (33m ago)   4d13h   192.168.1.201   k8s-ctrl1     <none>           <none>
kube-controller-manager-k8s-ctrl2          1/1     Running   1 (34m ago)   4d3h    192.168.1.202   k8s-ctrl2     <none>           <none>
kube-controller-manager-k8s-ctrl3          1/1     Running   2 (34m ago)   4d3h    192.168.1.203   k8s-ctrl3     <none>           <none>
kube-proxy-ctspc                           1/1     Running   1 (34m ago)   4d3h    192.168.1.202   k8s-ctrl2     <none>           <none>
kube-proxy-d64kt                           1/1     Running   3 (33m ago)   4d13h   192.168.1.201   k8s-ctrl1     <none>           <none>
kube-proxy-h4zjz                           1/1     Running   0             114s    192.168.1.211   k8s-worker1   <none>           <none>
kube-proxy-tmqkh                           1/1     Running   2 (34m ago)   4d3h    192.168.1.203   k8s-ctrl3     <none>           <none>
kube-scheduler-k8s-ctrl1                   1/1     Running   5 (33m ago)   4d13h   192.168.1.201   k8s-ctrl1     <none>           <none>
kube-scheduler-k8s-ctrl2                   1/1     Running   1 (34m ago)   4d3h    192.168.1.202   k8s-ctrl2     <none>           <none>
kube-scheduler-k8s-ctrl3                   1/1     Running   2 (34m ago)   4d3h    192.168.1.203   k8s-ctrl3     <none>           <none>

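Conclusion

In this way, I was able to build an HA (High Availability) home Kubernetes cluster using three Raspberry Pis, one PC, and one small router running OpenWrt.

Even under the label "HA configuration" there are many possible designs, and working out this one took a fair amount of time, from understanding load balancers to preparing the hardware. I feel that the process deepened my understanding of Kubernetes.

I also think this write-up documents a workable way to catch up on the technology by building a home Kubernetes cluster in two stages: first "a cluster of three Raspberry Pis", then "a cluster of three Raspberry Pis + one PC + a small router".

References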

- Creating Highly Available Clusters with kubeadm | Kubernetes

- Creating Highly Available Clusters with kubeadm – Google Search

- Setting up Kubernetes High Availability Cluster – Building and testing a multiple masters Part II – Unitas Global

- Setting up Kubernetes High Availability Cluster – Building and testing a multiple masters Part I – Unitas Global

- 多機能プロクシサーバー「HAProxy」のさまざまな設定例 | さくらのナレッジ (Various configuration examples for the multi-function proxy server HAProxy)

- Managed Kubernetesサービス開発者の自宅k8sクラスタ全容 (The full picture of a managed Kubernetes service developer's home k8s cluster)